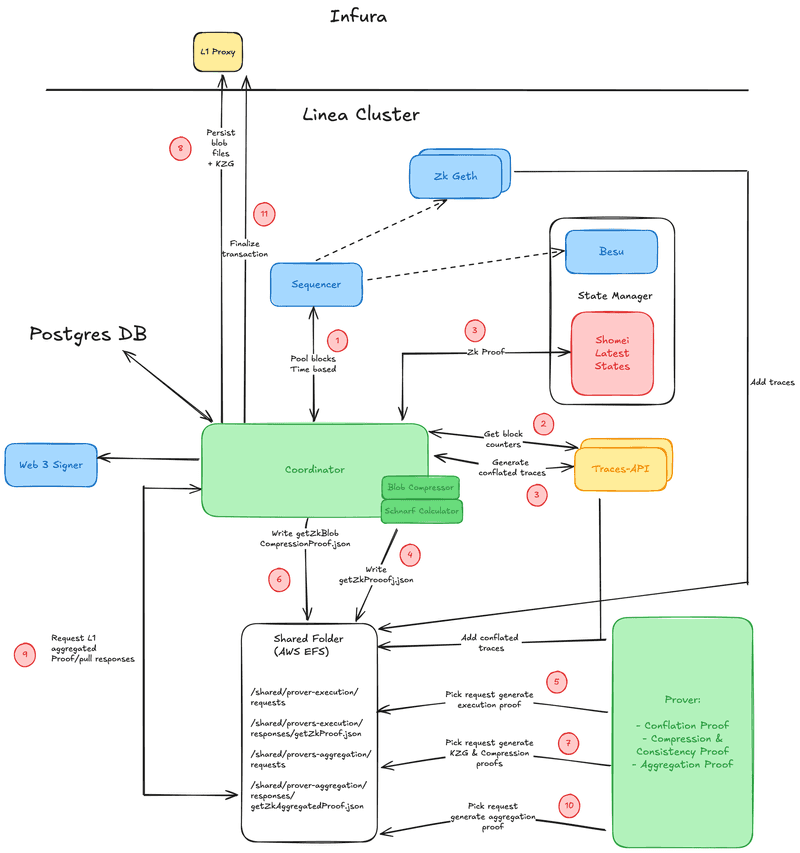

Linea Architecture Description

Linea architecture description

Transaction Execution and Management

-

The objective of the Linea Network is to provide functionality of Ethereum Network at a fraction of the cost of Ethereum mainnet while providing guarantees on the correctness of its state.

-

Transaction execution is done using Clique consensus protocol and correctness is guaranteed by providing proof of validity using zk-proofs

-

The main components of Linea are

- The sequencer which plays the role of signer of the Clique protocol and creates blocks

- The state manager for all L2 states which is used to store Linea state in a way that makes it easy to generate data used as inputs for zk-proof creation.

- The coordinator which orchestrates the different steps to create zk-proofs and persists them in the L1 Ethereum network. In particular, it is responsible for conflated batch creation blob creation and aggregation.

- The traces-APIs which provide trace counts and generate conflated trace files to be used for zk-proof creation

- The provers which generate zk-proof for conflated blocks, kzg commitment and compression proof of the blobs, as well as aggregated proof for multiple conflation and compression proofs.

File System

- A file system is shared across multiple processes. It is used to persistent queue.

- The file system is used to store files for

- Block traces

- Conflated-traces of batches

- Prover request

- Proof responses

- A script automatically removes all files that are more than one week old.

Sequencer

- There is a unique instance of Sequencer. It is special instance of consensus client based on Besu.

- The consensus protocol is used in Clique.

- The sequencer receives the transactions pre-validated by the Ethereum nodes in order to execute them.

- It arranges and combines the transactions into blocks.

- Blocks produced by the sequencer, in addition to common verifications, must fulfill the below requirements

- Trace counts are small enough to ensure the prover can prove the block

- The block total gas usage is below the

gasLimitparameter - And for each transaction

- gas fees are above

minMinableGasPrice - They are profitable i.e. the ratio between gas fees and compressed transaction size is good enough.

- Neither

fromnortofields of the transaction correspond to a blacklisted user. callDatasize is below the configured limitgasLimitis below the configured limit

- gas fees are above

- Transactions exceeding trace limits are added to an

unexecutableTxListin-memory to avoid reconsidering them. - Similarly transactions that take too long to be processed by the sequencer are added to the unexecutable list.

- Transactions from unexecutable list are removed from the pool.

- Priority transactions are prioritized over normal ones.

- Priority transactions are those sent by a user whose address is in predefined list.

- It typically corresponds to the transactions triggered by the Linea system.

- Note that if no transactions are received within the block window, no block is generated.

- This behavior differs from Ethereum mainnet, where empty blocks are still produced to maintain the chain continuity and prevent certain attacks.

- If a transaction could not be included in the current block, it will remain as a candidate for inclusion in the next block.

- Endpoints are exposed by the sequencer and used in the flow are:

eth_blockNumber():

blockNumber stringeth_getBlockByNumber(

blockNumber string

Transaction_detail_flag boolean

)State Manager

For L1 finalized states

- The state manager is configured differently based on the role it serves.

- The node described in this section is configured to serve the

linea_getProofmethod, and has a different configuration than the one described in State manager - for L2 states.

- The publicly exposed method

linea_getProofis served by the state manager. - We have a single instance of state manager.

- The state manager keeps its representation of the Linea state by applying the block transactions computed by the sequencer, which it receives through P2P.

- It is composed of two parts

- A Besu node with a Shomei plugin

- A Shomei node

- The Besu node is connected to other Ethereum nodes using P2P, and from the blocks it receives, it generates a trielog which it sends to Shomei node.

- The trielog is a list of state modifications that occurred during the execution of the block.

- As soon as Shomei receives this trielog it will apply these changes to its state.

- The Shomei nodes use Sparse Merkle Trees to represent Linea's state.

- Besu and Shomei nodes know each other's IP and port of direct communication.

- The Shomei plugin implements the RPC endpoint to return rollup proofs.

- Rollup proofs are used to provide Merkle proof of the existence of a key in a given block number of specific address.

- This proof can be used onchain in the Ethereum L1 for the blocks that have been finalized given their root hashes are present on chain.

- This state manager instance, which is configured to handle the

linea_getProofrequests is configured to store only the states corresponding to the L2 blocks finalized on L1.

- To serve the

linea_getProofrequests, the Shomei instance needs to know the latest L2 block finalized on L1. - It is pushed by the coordinator to Shomei, through a dedicated RPC endpoint.

- The IP address and port of Shomei are set in the coordinator configuration, allowing direct communication

- The endpoint

linea_getProofis as follows

linea_getProof(

address string

key List[string]

blockNumber string

):

result: {

accountProof MerkleProof

storageProofs List[MerkleProof[^1]]

}For latest L2 States

- This component is identical to the one described in State manager - for L1 finalized states except that it configured to compute and keep the state of each L2 block.

- The IP address of Shomei is set in the coordinator configuration, allowing direct communication.

- The coordinator calls it to get proofs of state changes that are used as input for the prover.

- Endpoints exposed by state manager and used in the flow are

rollup_getZkEVMStateMerkleProofV0(

startBlockNumber int

endBlockNumber int

zkStateManagerVersion string

):

zkParentStateRootHash string

zkStateMerkleProof StateMerkleProof[^1]

zkStateManagerVersion stringCoordinator

-

The coordinator is responsible for

- deciding how many blocks can be conflated into a batch

- deciding when to create a block

- deciding when an aggregation is generated

- posting proofs and data to L1

-

For this, it gets the blocks from the sequencer using JSON-RPC

eth_blockNumberandeth_getBlockByNumbermethods. This is done everyblock timeseconds.

Batches

- The coordinator has a candidate list of blocks to create the next batch.

- For every new block, the coordinator checks if it can be added to the list while respecting the batch requirements (data size and line count limits).

- If a block cannot be added, a new batch is created from the candidate list, and a new list is started containing the new block.

- When the coordinator creates a batch of blocks, it must ensure that:

- The data of all blocks of batch can fit in a single blob or the call data of a single transaction limit.

- Each batch can be proven in a conflation-proof (trace counts limit)

- For each block, the coordinator gets the trace counts by calling a service implementing traces api

linea_getBlockTracesCountersV2JSON-RPC method.

- It checks for the total compressed size of the transactions in the batch under construction using the compressor library

canAppendBlockmethod of the compressor.

- In case of low activity, the coordinator triggers batch creation after some time out.

- Upon batch creation, the coordinator gathers the inputs to create an execution proof request, namely: the conflated traces using

linea_generateConflatedTracesToFileV2, and a Merkle proof for the change of state between the first and the last block of the batch usingrollup_getZkEVMStateMerkleProofV0

- The coordinator collects all L2-L1 requests to be included in the batch looking at all logs emitted by

l2MessageServiceAddressfor each block in the batch. - The coordinator also collects L1-L2 anchoring message event logs.

- The coordinator creates an execution proof request containing all the information required to generate the proof.

Blobs

- The coordinator creates blobs by combining multiple contiguous batches.

- Upon creation of a blob, the coordinator must ensure that the total compressed size of the batches constituting it can fit in an EIP4844 blob (the size has to be at most 128KB, current limit being 127 KB).

- To ensure profitability, the coordinator aims at fitting as much data in a blob as possible, hence it considers the size left in a blob while building batches.

- Dictated by constraints on the decompression prover, the plain size of batches on a blob must also add up to no more than around 800KB.

- If a batch cannot be added to the current, a blob is created, and a new blob construction is initiated with the last batch.

- For every blob, the coordinator creates a KZG consistency proof and a compression proof request.

- Once the proofs are generated, the coordinator sends the blob to L1 with the KZG proofs using blob storage.

- Once the blob has been generated, the coordinator retrieves a state change merkle proof from the state manager using

rollup_getZkEVMStateMerkleProofV0.

- It then calculates its shard using go calculate shard library.

- Finally it creates a proof request file for a KZG and a compression proof. This request file contains all the required data to generate the proof.

- The coordinator is responsible for ensuring that all data required by the prover is available before creating the request.

- Once the KZG proof is generated, the coordinator sends a transaction to L1

LineaServicewith the blob and this proof as described in L1 finalization. - In addition, it sends merkle tree roots of merkle trees storing L1L2 and L2L1 messages.

Aggregation

- When sufficiently many batches and blobs have been generated, or after some maximum elapsed time, the coordinator creates a request file for the prover to generate aggregation proof.

- To build a request file, the coordinator needs information on the parent of the first block, its timestamp, the last L1-L2 message number and rolling hash anchored in this parent block.

- It gets this information using

L2MessageService.lastAnchoredL1MessageNumber and L2MessageService.l1RollingHashes- The coordinator ensures that all data required by the prover is available before creating the request.

Finalization

- Once the aggregation proof is available and after some delay the coordinator sends a finalization transaction to the L1 LineaService smart contract as described in L1 finalization.

- The smart contract verifies the caller entitlement, its solvability, the proof validity and then executes the L2 root hash state change.

Provers

-

There are three types of proofs:

- Execution

- Compression

- Aggregation

-

The proving flow is identical for all of them.

-

Each prover can generate any of the three types of proofs.

- The number of instances of prove is dynamic and driven by the number of proof requests in the file system.

- There is one instance of large prover running to handle requests that could not be handled by the regular prover instance.

- In testnet, but not in the main Linea layer, there are instances of provers that generate shorter proofs.

- The regular provers generate proofs with standard memory settings.

- The large prover also generates full proof, the difference is that it is configured to have 1TB of memory.

- They are used to deal with proofs that unexpectedly cannot be handled by the default fill prover.

- The proof sizes are estimated to be in the order of 2MB to 15MB.

- The prover encapsulates two processes

- One long running that monitors the file system and picks up proof requests

- Another short running one which is instantiated when a request is picked up by the first process.

- The long running process monitor triggers and monitors the short running process.

- It allows capturing unexpected issues occuring during the proving.

- The short running process is further made up of two internal components : the traces expander (aka Corset) and the prover itself.

- Corset is responsible for expanding execution traces into a format that prover can use before building the proof.

- The proof relies on the gnark library for zk-SNARKs implementation.

- Corset is hosted inside the same process as the short running component of the prover.

- Traces are expanded by Corset in memory and ingested by the prover directly, without the need for intermediate files to be sent over the network.

- This was motivated by the following reasons:

- Simplification of architecture overall, with one less component to manage/maintain

- Expanded traces is the largest file/chunk of data in the system, 100-500MB gzipped atm,

- No need to send large files over the network

- No need to decompress/deserialize expanded traces in the prover, which can be heavy when traces are large ~5GB;

- Reduce probability of incompatibility between Corset/Prover versions and their input/output formats

Execution Proofs

- It validates the correct execution of transactions within the Ethereum Virtual Machine (EVM) .

- The proof system for the execution has a complex structure which involves the Vortex proof system, GKR, and PLONK.

- The final proof takes the form of BLS12-377-based PLONK proof

- An execution request proof file is stored in the shared filesystem under the repository: with file name pattern:

$startBlockNumber-$endBlockNumber-etv$traceVersion-stv$stateManagerVersion-getZkProof.json - Requests folder name

/shared/prover-aggregation/requests - Responses folder name

/shared/prover-aggregation/responses

- Compression proof response file format

BlobCompressionProofJsonRequest

eip4844Enabled boolean

compressedData string // base64 encoded

dataParentHash string

conflationOrder ConflationOrder

parentStateRootHash string // hex encoded

finalStateRootHash string // hex encoded

prevShnarf stringConflationOrder

startingBlockNumber int

upperBoundaries List[int]- Compression proof response file format

BlobCompressionProofJsonResponse

eip4844Enabled boolean

dataHash string

compressedData string // kzg4844.Blob [131072]byte. The data that are explicitly sent in the blob (i.e. after compression)

commitment string // kzg4844.Commitment [48]byte

kzgProofContract string

kzgProofSidecar string

expectedX string

expectedY string

snarkHash string

conflationOrder ConflationOrder

parentStateRootHash string

finalStateRootHash string

parentDataHash string

expectedShnarf string

prevShnarf stringAggregation Proof

- Serves as the cornerstore of Linea's proof system, recursively verifying proofs from N execution circuits and M compression circuit instances.

- This circuit encapsulates the primary statement of Linea's prover and is the sole circuit subject to external verification.

- The proof system used is a combination of several PLONK circuits on BW6, BLS12-377 and BN254 which tactically profits from the 2-chained curves BLS12-377 and BW6 to efficiently recurse the proofs.

- The final proof takes form of BN254 curve that can be efficiently verified on Ethereum thanks to the available precompiles

L2 Message Service

- This is the L2 smart contract messaging service used to anchor messages submitted to L1.

- It is responsible for ensuring that messages are claimed at most once.

- Methods

lastAnchoredL1MessageNumber():

lastAnchoredL1MessageNumber uint256l1RollingHashes(

messageNumber uint256

):

rollingHash bytes32L1-L2 Interactions

- There are three types of integration

- Block finalization, specific to Linea, is used to persist on L1 the state changes happening on L2.

- This happens in two steps, first blob data and KZG proofs are persisted on L1, and then aggregation proofs are sent to L1

- L1 -> L2 typically used to transfer funds from L1 to L2

- L2 -> L1 to retrieve funds from L2 back to L1.

- Block finalization, specific to Linea, is used to persist on L1 the state changes happening on L2.

L1 Finalization

Blobs

- The coordinator submits up to six blobs it generates at once to L1 using eip4844 standard to

v3.1 LineaRollup.submitBlobsalongside the KZG proof. - The LineaRollup smart contract verifies the validity of the proofs for the given blob of data.

- Blob submission can support sending up to siz blobss at once.

- This allows for saving cost by amortizing the processing overhead over multiple blobs

submitBlobs(

blobSubmissionData BlobSubmissionData[]

parentShnarf bytes32

finalBlobShnarf bytes32

)Where

BlobSubmissionData

dataEvaluationClaim uint256

kzgCommitment bytes

kzgProof bytes

finalStateRootHash bytes32

snarkHash bytes32Aggregation

- L1 finalization is triggered by the coordinator once an aggregation proof has been generated.

- This is done by trigerring a transaction to execute

LineaService.finalizeBlocksmethod on L1. - In the process, the aggregation proof is sent to L1.

- Once the transcation is completed on L1, all the blocks are finalized on L2.

- The interface use is described below labeled with their respective Linea release version

finalizeBlocks(

aggregatedProof bytes

proofType uint256

finalizationData FinalizationDataV3

)where

FinalizationDataV3

parentStateRootHash bytes32;

endBlockNumber uint256;

shnarfData ShnarfData;

lastFinalizedTimestamp uint256;

finalTimestamp uint256;

lastFinalizedL1RollingHash bytes32;

bytes32 l1RollingHash;

uint256 lastFinalizedL1RollingHashMessageNumber;

uint256 l1RollingHashMessageNumber;

uint256 l2MerkleTreesDepth;

bytes32[] l2MerkleRoots;

bytes l2MessagingBlocksOffsets;L1 -> L2 Messages

- Cross-chain operations happen when a user triggers transcation by calling the

L1MessageService.sendMessagemethod of message service smart contract, where L1MessageService is inherited by the deployed LineaRollup smart contract.

sendMessage(

to String

fee uint256

calldata bytes

)- Optional ETH value to be transferred can be set when calling the above method.

- When such a transaction is executed on L1, it triggers the publication of a message in the chain log of events, and the user pays for gas related to the L1 transaction execution.

- Internally, the message service computes a rolling hash. It is computed recursively as the hash of the previous rolling hash and the new message hash.

- Linea's coordinator, which is subscribing to L1 events, detects the L1 finalized cross-chain MessageSend event and anchors it on L2.

- The coordinator anchors the messages by batches.

- The coordinator anchors the messages by batches.

- Anchoring (Anchoring is a process of placing cross-chain validity reference that must exist for any message to be claimed) of messages is done through the executed

anchorL1L2MessageHasheswhcih is inherited by theL2MessageServicesmart contract.

- To anchor message, the coordinator collects all L1-L2 message message logs from which it gets the message hash, the rolling hash, the nonce and the L1 block number.

- Note that the L1 block number facilitates the processing but is not anchored on L2.

- Filtering takes place beforehand to exclude anchored messages by lookin on L2 at the

L2MessageService.inboxL1L2MessageStatusfor each hash to see if it is already anchored.

anchorL1L2MessageHashes(

messageHashes bytes32[]

startingMessageNumber uint256

finalMessageNumber uint256

finalRollingHash bytes32

)- The rolling hash passed as parameter to this method serves to perform a soft validation that the messages anchored on L2 lead to the expected rolling hash computed on L1.

- It is used to detect incosistency early, but it does not provide provable guarantees. The full benefit of the rolling hash is only really realized on L1 finalization as part of the feedback loop.

- Checking that the rolling hash and message number match, ensures that the anchoring is censorship and tamper-resistant.

- Once the message is anchored on L2, it can be claimed. Claims can be triggered via a Postman or manually.

- The coordinator is the only operator entitled to anchor messages on L2.

- Triggering via the Postman service will happen if the initial user asks for it and has prepaid the estimated fees.

- In this case, if the prepaid fees match the Postman service expectations, it executes the transaction to call the L2 Service Message claimMessage method and the Postman service receives the prepaid estimated fees on L2.

- Additionally, should the cost to execute the delivery be less than the fee paid, and the difference is cost-effective to send to the message recipient, the difference is transferred to the destination user.

- Claim can also be done manually. In this case, a user will trigger the claim transaction using L2 Service message and pay for the gas fees.

claimMessage(

from address

to address

fee uint256

value uint256

feeRecipient address

calldata bytes

nonce uint256

)

- When a message is claimed, an event is emitted using:

emit MessageClaimed(messageHash)- On finalization the value of the final (last in rollup data being finalized) RollingHashUpdated event on the L2 Service Message is validated against the expected value on L1 exists.

- A successful finalization guarantees that no message can be claimed on L2 that did not exist on L1, and that no messages were excluded on anchoring message hashes.