Python Memory Management

One can think of computer's memory as an empty book intended for short stories. There is nothing written on pages yet. Eventually, different authors will come along. Each author wants some space to write their story in.

Since they aren't allowed to write over each other, they must be careful about which pages they write in. Before they begin writing, they consult the manager of the book. The manager then decides where in the book they are allowed to write.

Since this book is around for a long time, many of the stores in it are no longer relevant. When no one reads or references the stories, they are removed to make room for new stories.

In essence, computer memory is like that empty book. In fact, it is common to call fixed-length contiguous block of memory pages, so this analogy holds pretty well.

The authors are like different applications or processes that need to store data in memory. The manager, who decides where the authhors can write in the book, plays the role of memory manager of sorts. The persom who removed the old stories to make room for new stories is like the garbage collector.

From Hardware to Software

Memory management is the process by which applications read and write data. A memory manager determines where to put an application's data. Since there is fifnit chunk of memory, like the pages in our book analogy, the managner has to find some free space and provide it to application. This process of providing memory is generally called memory allocation.

On the flip side, when data is no longer needed, it is removed from memory. But freed to where ? Where did this memory come from ?

Somewhere in your computer, there is a physical device storing data when you are running your python programs. There are many layers of abstraction that the Python code goes through befire the object actually get to the hardware though.

Above the OS, there are applications one of which is default Python implementation. Memory management for your Python code is handled by the Python application. The algorithms and structures that the Python application uses for memory management is the focus of this article.

The Default Python Implementation

The default Python implementation, CPython, is actually written in C programming language.

When I first heard this, it blew my mind. I was like, "How can a programming language be written in another programming language ?"

The Python language is defined in a reference manual written in English. However, that manual isn't al. that useful by itself. You still need something to interpret written code based on the rules in the manual.

You also need something to actually execute interpreted code on a computer. The default Python implmentation, fulfills both those requirements. It converts your Python code into instructions that it then runs on the virtual machine.

Python is an interpreted programming language. Your Python code actually gets compiled tdown to more readable instructions called bytecode. These instructions get interpreted by the virtual machine when you run your code.

Have you ever seen a .pyc file ? That's the bytecode for your Python code that gets interpreted by the virtual machine.

It is important to note that there are implementations of other than CPython. IronPython compiles down to run on Microsoft's Common Language Runtime. Jython compiles down to run on Java Virtual Machine. PyPy is a just-in-time compiler for Python.

For the purposes of this article, we will focus on CPython.

Okay, so CPython is written in C, and it interprets Python bytecode. What does this have to do with memory management ? Well, memory management algorithms and structures exist in CPython code, in C. To understand the memory management of Python, you have to get basic understanding of CPython itself.

CPython is written in C, which does not natively support object-oriented programming. Because of that, there are quite a bit of interesting designs in CPython code.

You may have heard that everything in Python is an object, even types such as int and str. This is true. In CPython, these types are implemented as structures. There is a struct called PyObject which every other object in CPython uses.

The PyObject, the grand-daddy of all objects in Python, contains only two things:

obj_refcntwhich is the reference count of the object.obj_typewhich is the type of the object.

The reference count is used for garbage collection. Then you have a pointer to the actual object type. That object type is just another struct that describes the Python object.

Each object has its own object specific memory allocator that knows how to get the memory to store that object. Each object also has an object specific memory deallocator that knows how to free the memory when the object is destroyed.

However, there is an important factor in all this talk about allocating and freeing memory. Memory is a shared resource on the computer. Bad things can happen if two different processses try to write to the same memory location at the same time.

The Global Interpreter Lock

The GIL is a solution to the common problem of dealing with shared resources, like memory in computer. When two threads try to modify the same resource at the same time, they can step on each other's toes. The end result can be garbled mess where neither of the threads end up with what they wanted.

Consider the book analogy. Suppose that two authors stubbornly decide that its their turn to write. Not only that, but they both need to write on the same page of the book at the same time.

They ignore the other's attempt to craft a story and begin writing on the page. The end result is two stories on top of each other., which makes the whole page completely unreadable.

One solution to this problem is a single, global lock on the interpreter when a thread is interracting with the shared resource. In other words, only one author can write at a time.

Python's GIL accomplishes this by locking the entire interpreter meaning that its not possible for another thread to step on the current one. When CPython handles memory, it uses the GIL to ensure that it does so safely.

Garbage Collection

Let's revisit the book analogy and assume that some of the stories in the book are getting very old. No one is reading or referencing those stories anymore. If no one is reading something or referencing it in their own work, you could get rid of it to make room for new writing.

That old, unreferenced writing could be compared to an object in Python whose reference count has dropped to 0. Remember that every object has a reference count and a pointer to a type. The reference count gets increased for a few different reasons. For example, the reference count will increase if you assign it to annother variableL

numbers = [1, 2, 3]

# reference count of [1, 2, 3] is 1

more_numbers = numbers

# reference count of [1, 2, 3] is now 2It will also increase if you pass it to a function or method.

total = sum(numbers)As a final example, the reference count will increase if you include the object in the list:

matrix = [numbers, numbers, numbers]Python allows you to inpsect the current reference count of an object with the sys module. You can use the sys.getrefcount() function to get the reference count of an object.

In any case, if the object is still required to hang around in your code, its reference count is greater than 0. Once it drops to 0, the object has a specific deallocation function that is called which “frees” the memory so that other objects can use it.

But what does it mean to “free” the memory, and how do other objects use it? Let’s jump right into CPython’s memory management.

CPython's Memory Management

We are going to dive deep into CPython's memory architecture and algorithms, so buckle up.

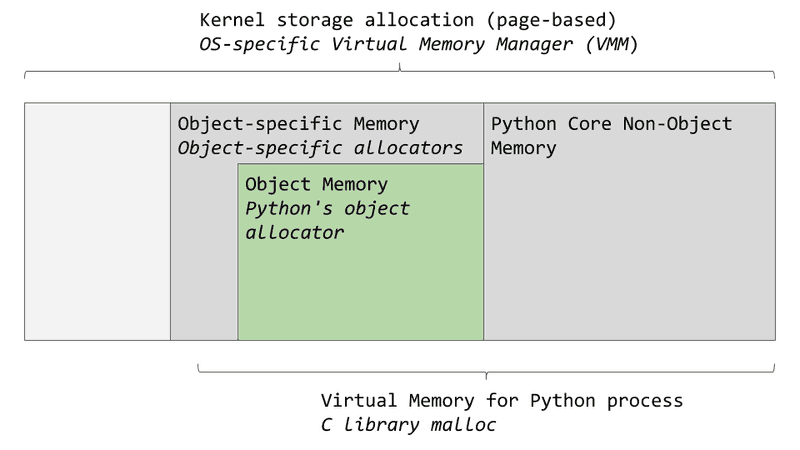

As mentioned before, there are layers of abstraction from Physical Hardware to CPython. The operating system abstracts the physical memory and creates a virtual memory layer that applications (including Python) can access.

An OS-specific virutal memory manager carves out a chunk of memory for the Python process. The darker gray boxes in the image below are now owned by the Python process.

Python uses a portion of the memory for internal use and non-object memory. The other portionn is dedicated to object storage.

CPython has an object allocator that is responsible for allocating memory within the object memory area. This object allocator is where most of the magic happens. It gets called every time a new object needs space allocated or deleted.

Typically, the adding an removing of data for Python objects like list and int does not involve too much data at a time. So the design of the allocator is tuned to work well with small amounts of data at a time. It also tries not to allocate memory until it is absolutely required.

The comments in theh source code describe the allocator as "a fast, special-purpose memory allocator for small blocks, to be used on top of a general purpose malloc." In this case, malloc is C's library function for memory allocation.

Now we'll look at CPython's object allocator in more detail. First we will talk about the 3 main pieces and how they relate to each other.

Arenas are the largest chunk of memory and are aligned on a page boundary in memory. A page boundary is the edge of a fixed length contiguous chunk of memory that the OS uses. Python assumes the system's page size is 256 kilobytes.

Within arenas aare pools, which are one virtual memory page (5 kilobytes). These are like the pages in our book analogy. These pools are fragmented into smaller blocks of memory.

All the blocks in a given pool are of the same "size class". A size class defines a specific block size, given some amount of requested data. The chart below is taken directly from source code.

| Request in bytes | Size of allocated block | Size class idx |

|---|---|---|

| 1-8 | 8 | 0 |

| 9-16 | 16 | 1 |

| 17-24 | 24 | 2 |

| 25-32 | 32 | 3 |

| 33-40 | 40 | 4 |

| 41-48 | 48 | 5 |

| 49-56 | 56 | 6 |

| 57-64 | 64 | 7 |

| 65-72 | 72 | 8 |

| ... | ... | ... |

| 497-504 | 504 | 62 |

Pools

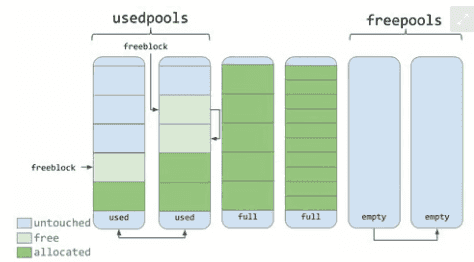

Pools are composed of blocks. Each pool maintains a double-linked list of other pools of the same size class. In that way, the algorithm can easily find available space for a given block size, even across different pools.

A usedpools list tracks all the pools that have some space available for data for each size class. When a given block size is requested, the algorithm checks this usedpools list for the list of pools for that block size.

Pools themselves must be in one of the 3 states: used, full, or empty. A used pool has some space available for data. A full pool has no space available for data. An empty pool has no space available for data and no blocks have been allocated from it.

A freepools list keeps track of all the pools in the empty state. But when do empty pools get used ?

Assume your code needs an 8-byte chunk of memory. If there are no pools in usedpools of the 8-byte size class, a fresh empty pool is initialized to store 8-byte blocks. This new pool then gets added to the usedpools list for its size class.

Blocks

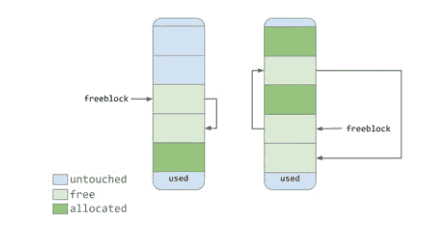

As seen in the digram above, pools contain a pointer to their "free" blocks of memory. There is a sligh nuance to the way this works. This allocator strives at all levels (arena, pool, and block) never to touch a piece of memory until it is actually needed, according to the comments in the source code.

That means that pool can have blocks in 3 states. These states can be defined as follows:

untouched: a portion of memory that has not been allocatedfree: a portion of memory that was allocated but later made "free" by CPython and that no longer contains relevant dataallocated: a portion of memory that actually contains relevant data

The freeblock pointer points to a singly linked list of free blocks of memory. In other words, a list of available places to put data. If more than the available free blocks are needed, the allocator will get some untouched blocks in the pool.

As the memory manager makes blocks "free", those now free blocks get added to the front of the freeblock list. The actual list may not be conntiguous blocks of memory, like the first nice diagram. It may look something like below:

Arenas

Arenas contain pools. Those pools can be used, full, or empty. Arenas themselves don’t have as explicit states as pools do though.

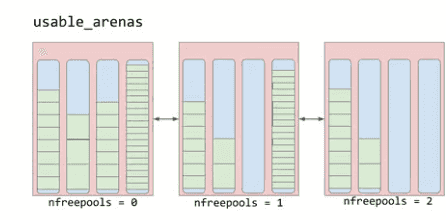

Arenas are instead organized into a doubly linked list called usable_arenas. The list is sorted by the number of free pools available. The fewer free pools, the closer the arena is to the front of the list.

This means that the arena that is the most full of data will be selected to place new data into. But why not the opposite? Why not place data where there’s the most available space?

This brings us to the idea of truly freeing memory. You’ll notice that I’ve been saying “free” in quotes quite a bit. The reason is that when a block is deemed “free”, that memory is not actually freed back to the operating system. The Python process keeps it allocated and will use it later for new data. Truly freeing memory returns it to the operating system to use.

Arenas are the only things that can truly be freed. So, it stands to reason that those arenas that are closer to being empty should be allowed to become empty. That way, that chunk of memory can be truly freed, reducing the overall memory footprint of your Python program.

Conclusion

Memory management is an integral part of working with computers. Python handles nearly all of it behind the scenes, for better or for worse.

In this article, you learned:

- What memory management is and why it’s important

- How the default Python implementation, CPython, is written in the C programming language

- How the data structures and algorithms work together in CPython’s memory management to handle your data

Python abstracts away a lot of the gritty details of working with computers. This gives you the power to work on a higher level to develop your code without the headache of worrying about how and where all those bytes are getting stored.